CASA News

Issue Iss#

Day# Month# Year#

Letter from the Lead

The Common Astronomy Software Applications (CASA) team has made changes in the last six months to improve the quality of our software and better align our development process with the needs of our user base. Our focus is on proper requirements documentation with verification testing that traces back to the written documentation. To that end, we have created a Science Development Lead position within the team to oversee requirements intake and transformation into actionable development plans. We have also formed a dedicated Verification Test team to create and maintain a suite of automated tests written against the advertised CASA documentation.

I am pleased to announce that Urvashi Rao Venkata (NRAO) has been appointed as the Science Development Lead within the CASA group and has been doing a fantastic job over the last development cycle. As this position touches many aspects of development within CASA, she has also been appointed as the Deputy CASA Group Lead.

More recently, Sandra Castro (ESO) was appointed as the Verification Team Lead, building on her background in CASA development and extensive experience in High Performance Computing testing. She is now leading a team of six within the CASA group, creating an independent verification test suite and improving the quality of our testing philosophy.

Lastly, I would like to announce that Juergen Ott has moved on to a new assignment within NRAO on the Very Large Array Sky Survey project after nearly 10 years as the CASA Project Scientist. We are grateful for his long and dedicated service in that very challenging position and wish him well in his new role. Jennifer Donovan Meyer has taken over as the new CASA Project Scientist after a long history leading the Validation testing effort.

I am excited to have Jennifer, Sandra, and Urvashi in these leadership roles on the CASA team and look forward to future development cycles with our new focus and structure in place.

CASA 5.6 Releases

Over the past months, the CASA team released a series of CASA 5.6 versions: CASA 5.6.0, 5.6.1, and 5.6.2. All three 5.6 versions are suited for manual data processing. CASA 5.6.1 fixed several bugs that were present in 5.6.0, has been scientifically validated for the Atacama Large Millimeter/submillimeter Array (ALMA), and is bundled with the ALMA Cycle 7 pipeline. CASA 5.6.2 includes the latest version of the pipeline that has been scientifically validated for the Very Large Array (VLA). It is good practice for general users to always use the latest CASA release for manual processing, regardless of whether or not a pipeline is included, unless otherwise recommended by ALMA or VLA.

The CASA 5.6 releases include the following highlights:

- Several new features are available for single dish processing, including the new task nrobeamaverage. See the Nobeyama Pipeline article in this CASA Newsletter for details.

- In tclean, a new version of the parameter ‘smallscalebias’ has been implemented for deconvolver = ‘mtmfs’ and ‘multiscale’, to more efficiently clean signal on different spatial scales. Please note that this implementation requires users to update their scripts where needed, to reflect changes in the value of the smallscalebias parameter. See CASA Memo #9 for details.

- A critical bug was fixed in tclean, where explicitly setting the restoringbeam parameters produced the wrong flux scaling of the residuals. See CASA Memo #10 for details.

- The task bandpass now supports relative frequency-dependent interpolation when applying bandpass tables.

- The task simobserve can now create multi-channel MeasurementSets from a component list.

Other highlights include updates to the fringefit, plotms, and statwt tasks, and the inclusion of new ALMA receiver temperatures and a new ATM library of atmospheric models.

The full release notes for the CASA 5.6 versions can be found in the CASA Docs documentation. The CASA 5.6 versions are available for download.

Performance & Reliability

In CASA 5.6, we also fixed a large number of memory leaks in the code, which should improve CASA performance. In addition, a new suite of verification tests was written to evaluate joint mosaicking and wideband imaging. These are steps in our continuing effort to make CASA more reliable, as requested by users in the 2018 CASA User Survey.

WARNING! We are starting to decommission the clean task. Although clean is still available in CASA 5.6, we urge all users to switch to tclean and update all personal data processing scripts. Please contact the Helpdesk if you need any help with the transition.

CASA 6: Modular Integration in Python

The initial release of CASA 6 is nearly ready for general use. CASA 6 has been reorganized to offer a modular approach, where users have the flexibility to build CASA tools and tasks in their Python environment.

This first release is targeted at developers and power users who build CASA into their own software applications and pipelines. The initial release includes the casatools, casatasks, and casampi modules, which provide the core data processing capabilities, including parallel processing. The CASA 6 release is functionally equivalent to the tools, tasks, and MPI capabilities available in CASA 5.6.1: the modules pass the same verification and unit testing required for CASA 5.6, and this first release of CASA 6 adds no new features relative to CASA 5.6.1. Consequently, CASA 6 shares the documentation of CASA 5.6, with an additional page of CASA 6-specific installation and usage notes.

The initial CASA 6.0 release is not intended for general users doing manual data reduction. It lacks many of the custom interactive shortcuts present in CASA 5, as well as many of the graphical user interfaces. Development continues on the data visualization packages, including casaplotms, casaviewer, and CARTA, as these involve external applications executing outside the Python environment. An upcoming 6.1 release, scheduled for spring 2020, will contain a complete replacement for all CASA 5 components, including a single tar file distribution for those wishing to continue installing CASA in that manner.

The CASA 6 release announcement will be sent in the coming weeks to the CASA users email distribution in the same manner as previous CASA releases. The download location and instructions will be provided at that time.

Stepping Out of the Shadow: CASA’s role in EHT

Event Horizon Telescope Collaboration

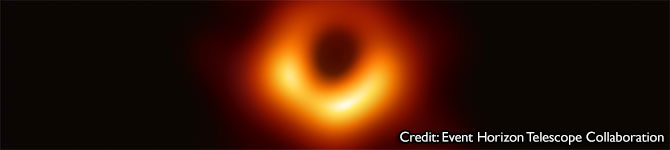

The supermassive black hole at the center of M87, as imaged by the Event Horizon Telescope. The image shows a bright ring, formed as light bends in the strong gravitational field.

In spring 2019, the Event Horizon Telescope (EHT) Collaboration published the first image of a black hole shadow (see Figure). The image dominated newspaper and science journal headlines for weeks and has recently been awarded the Breakthrough Prize in Fundamental Physics. It may be less obvious that CASA played an important role in obtaining this result.

The calibration of the M87 observations by the EHT posed a unique and complex problem. The combination of very long baselines and high observing frequency pushed current technology and software to its limits, and sometimes beyond. In addition, the high scientific ambitions required detailed verification at every step in the calibration process. The entire process of calibration and error analysis is presented in EHT paper III.

To enable cross-validation, the EHT decided in 2016 to develop three independent calibration pipelines, using HOPS (EHT pipeline: Blackburn et al. 2019), AIPS (Greisen 2003), and CASA (McMullin et al. 2007; EHT pipeline: Janssen et al. 2019). The CASA development was made possible by a strong push from European partners united in the BlackHoleCam project (ERC Synergy Grant 610058). Under this project, JIVE started the development of Very Long Baseline Interferometry (VLBI) tools and tasks in CASA, as described in an earlier Newsletter article and at the EVN Symposium 2018 (van Bemmel et al. 2019). This soon evolved into a close and productive collaboration with NRAO. At Radboud University in Nijmegen, the new tools and tasks were tested and implemented in the rPICARD pipeline (Janssen et al. 2019).

A key step in VLBI data processing is fringe fitting, which removes residual phase, delay, and rate errors arising from, e.g., errors in the correlator model and atmospheric turbulence. The CASA task fringefit and the AIPS task FRING are both implementations of the Schwab-Cotton algorithm (Schwab & Cotton 1983). The HOPS task fourfit takes a mathematically different approach to fringe fitting (Rogers et al. 1995).
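For readers new to fringe fitting, the core idea can be sketched on a single baseline: a residual delay makes the visibility phase wind linearly with frequency, and a residual rate makes it wind linearly with time, so the peak of a 2-D Fourier transform over frequency and time gives coarse estimates of both. The NumPy sketch below simulates such a baseline and recovers the delay and rate. It illustrates only the FFT search that commonly supplies starting values; it is not the global least-squares solution of the Schwab-Cotton algorithm, nor the code of CASA fringefit, AIPS FRING, or HOPS fourfit. The function names, simulated parameters, and noise level are assumptions chosen for illustration.

```python
import numpy as np


def fft_fringe_search(vis, chan_width_hz, int_time_s, pad=4):
    """Coarse delay and fringe-rate search on a single baseline (illustrative).

    vis: complex visibilities shaped (n_time, n_chan) for one polarization.
    Returns (delay_s, rate_hz) at the peak of the zero-padded 2-D FFT.
    """
    n_t, n_f = vis.shape
    # FFT over time gives the fringe-rate axis; FFT over frequency the delay axis.
    power = np.abs(np.fft.fft2(vis, s=(pad * n_t, pad * n_f)))
    i_t, i_f = np.unravel_index(np.argmax(power), power.shape)
    rates = np.fft.fftfreq(pad * n_t, d=int_time_s)
    delays = np.fft.fftfreq(pad * n_f, d=chan_width_hz)
    return delays[i_f], rates[i_t]


def remove_delay_rate(vis, chan_width_hz, int_time_s, delay_s, rate_hz):
    """Counter-rotate the visibilities by the estimated delay and rate."""
    n_t, n_f = vis.shape
    t = np.arange(n_t)[:, None] * int_time_s
    f = np.arange(n_f)[None, :] * chan_width_hz
    return vis * np.exp(-2j * np.pi * (delay_s * f + rate_hz * t))


# Simulated baseline (assumed values): 60 one-second integrations, 64 channels of 0.5 MHz.
rng = np.random.default_rng(0)
n_t, n_f, dt, dnu = 60, 64, 1.0, 0.5e6
true_delay, true_rate = 25e-9, 0.05  # 25 ns residual delay, 50 mHz fringe rate
t = np.arange(n_t)[:, None] * dt
f = np.arange(n_f)[None, :] * dnu
vis = np.exp(2j * np.pi * (true_delay * f + true_rate * t))
vis = vis + 0.5 * (rng.normal(size=vis.shape) + 1j * rng.normal(size=vis.shape))

delay, rate = fft_fringe_search(vis, dnu, dt)
corrected = remove_delay_rate(vis, dnu, dt, delay, rate)
print(f"delay = {delay * 1e9:.2f} ns, rate = {rate * 1e3:.1f} mHz")
```

Zero padding (pad=4) only refines where the peak lands on the grid; the intrinsic resolution is still set by the total bandwidth (here 32 MHz) and the time span (here 60 s). After correction, the visibilities add up coherently, which is the point of the step.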

For EHT data, the independent pipelines handled fringe fitting and bandpass calibration. Additional calibration steps, such as amplitude and network calibration, were performed by identical post-processing scripts on the pipeline results. As a consequence, the fringe fitting step was the main source of potential differences between results. Jupyter notebooks were developed to analyse the solutions and data quality after fringe fitting and compare the three pipelines. Details of these verification tests and pipeline comparisons are described in Sections 7 and 8 in EHT paper III.

The quality of the M87 calibration in the CASA pipeline was equivalent to that of HOPS and generally outperformed AIPS. This is a remarkable achievement, as VLBI processing in CASA and rPICARD had been under development for less than two years at the time, competing with the decades-long track record of HOPS for EHT-specific data processing and of AIPS for VLBI in general. For the first science release of the EHT observations, HOPS provided the formal pipeline, and the CASA and AIPS pipelines served as verification pipelines.

The rPICARD pipeline has since evolved into a generic VLBI data processing pipeline, and it can also reconstruct images using the multi-scale multi-frequency synthesis mode of the CASA tclean task. It has successfully processed VLBA and EVN data. Its main advantages are high flexibility and modularity. Parameters are self-scaling, allowing even non-experts to process their data blindly and then refine the calibration in a second run. Experts can set parameters by hand if desired.

Since the first EHT data release, the pipeline has continued to develop and improve its performance. Within the EHT collaboration there is still need for cross-verification; currently rPICARD and the HOPS pipeline are the only two pipelines in operation. On a larger scale, the VLBI functionality in CASA is maturing, and was recently used to teach VLBI data processing to young radio astronomers at ERIS2019. The joint effort of JIVE and NRAO is continuing to implement new functionality and improve existing VLBI tasks.

References

Blackburn L., Chan C.-K., Crew G. B., et al., 2019, arXiv:1903.08832

Event Horizon Telescope Collaboration, 2019, ApJL 875, L3 (EHT paper III)

Greisen E. W., 2003, in Information Handling in Astronomy: Historical Vistas, ed. A. Heck, Vol. 285 (Dordrecht: Kluwer), 109

Janssen M., Goddi C., van Bemmel I. M., et al., 2019, A&A 626, A75

McMullin J. P., Waters B., Schiebel D., Young W., Golap K., 2007, in ADASS XVI, ed. R. A. Shaw, F. Hill, D. J. Bell, ASP Conf. Ser. 376, 127

Rogers A. E. E., Doeleman S. S., Moran J. M., 1995, AJ 109, 1391

Schwab F. R., Cotton W. D., 1983, AJ 88, 688

van Bemmel I. M., Small D., Kettenis M., Szomoru A., Moellenbrock G., Janssen M., 2019, PoS(EVN2018)079

Simulating Terrestrial Planet Formation with a Next-Generation VLA

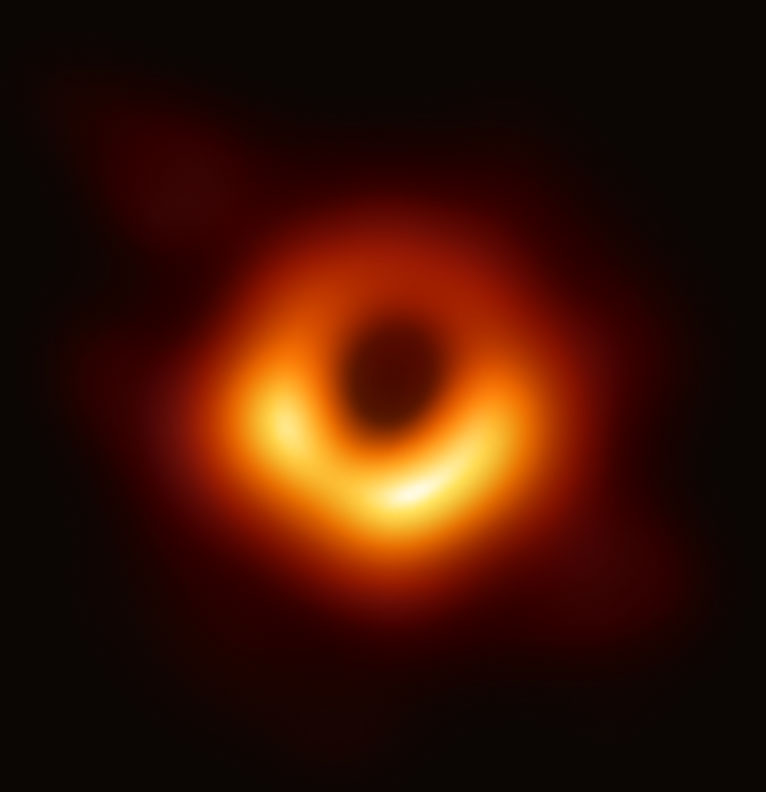

Figure 1: ngVLA and ALMA simulations for the disk model with a planet 3 AU from the central star and a planet-to-star mass ratio of 1 M⊕/M☉, at wavelengths of 1.25, 3, 7, and 10 mm. At d ~ 140 pc, the milli-arcsecond resolution of the ngVLA corresponds to scales well below 1 AU and can probe terrestrial planet formation. See ngVLA Memo 68.

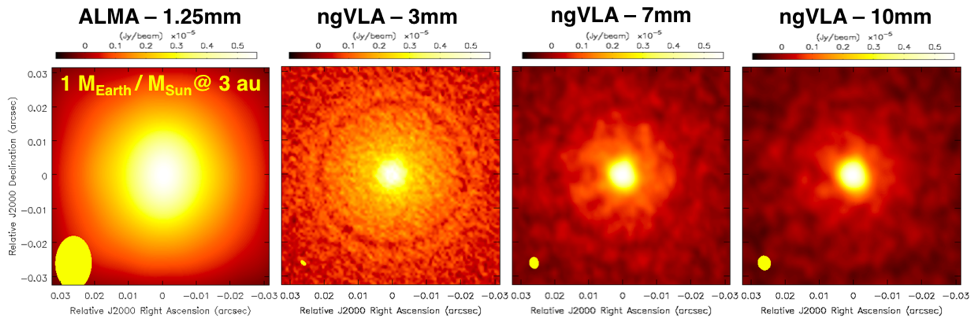

Figure 2: ngVLA simulation at 3 mm of a dust-trapping vortex. The synthesized beam has been uv-tapered to 5 milli-arcsecond resolution (~1 AU at 140 pc) to increase the surface brightness sensitivity. See ngVLA Memo 57.
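The linear scales quoted in the figure captions follow from the small-angle relation: by the definition of the parsec, 1 arcsec at a distance of 1 pc subtends 1 AU, so size (AU) = θ (arcsec) × d (pc). The short check below, with a hypothetical helper name, reproduces the numbers for d ≈ 140 pc.

```python
def angular_to_linear_au(theta_mas, distance_pc):
    """Linear size in AU subtended by an angle (milli-arcsec) at a distance (pc).

    Small-angle approximation: size_AU = theta_arcsec * d_pc.
    """
    return theta_mas * 1e-3 * distance_pc


for theta in (1.0, 5.0):
    print(f"{theta:.0f} mas at 140 pc -> {angular_to_linear_au(theta, 140):.2f} AU")
```

At 140 pc, 1 mas corresponds to 0.14 AU and 5 mas to 0.70 AU, consistent with the "well below 1 AU" and "~1 AU" scales in the captions.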

CASA simulations have played an integral role in establishing and assessing the capabilities of a next-generation Very Large Array (ngVLA). In a recent ngVLA Memo, new imaging simulations were used to investigate the ability of the ngVLA to detect and resolve substructures produced by planets in the dust emission of nearby disks. The input global 2-D hydrodynamical planet-disk simulations account for the dynamics of gas and dust in a disk with an embedded planet. The primary goal was to determine whether the ngVLA will be capable of detecting planets down to a single Earth mass, and the simulations indicate that it will be (see Figure 1).

Using CASA, the investigators found that terrestrial planets at orbital radii of 1−3 astronomical units (AU) can leave imprints in the dust continuum emission (e.g., see Figure 2). The ngVLA would be able to detect such dust features in ∼5−10 hours of integration time. Deeper observations (> 20 hours) would further make it possible to characterize the radial structure of the main rings and gaps, and to detect emission from azimuthal structures close to the planet, as expected from physical models of planet-disk interaction in low-viscosity disks.
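To put the quoted integration times in context: for thermal-noise-limited observations with the same array and bandwidth, the image rms scales with the inverse square root of the integration time (the radiometer equation). Assuming that scaling holds here (it ignores calibration errors and dynamic-range limits), going from 5 or 10 hours to 20 hours lowers the noise as follows:

```latex
\sigma_{\rm rms} \propto \frac{1}{\sqrt{\Delta\nu\, t_{\rm int}}}
\quad\Longrightarrow\quad
\frac{\sigma_{\rm rms}(20\,\mathrm{h})}{\sigma_{\rm rms}(5\,\mathrm{h})} = \sqrt{\frac{5}{20}} = 0.5,
\qquad
\frac{\sigma_{\rm rms}(20\,\mathrm{h})}{\sigma_{\rm rms}(10\,\mathrm{h})} = \sqrt{\frac{10}{20}} \approx 0.71
```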

CASA imaging and simulation capabilities have been used in a wide variety of ngVLA design studies, including studies of:

A CASA Guide has been developed to assist users interested in carrying out ngVLA simulations, along with a description of the repository of scientifically motivated reference models for array configurations in the CASA distribution.

CASA Pipelines

The Pipeline Team

The Pipeline team is pleased to announce two recent releases, both of which are available for download. The ALMA Pipeline release, updated to support Cycle 7 operations, was delivered to the Joint ALMA Observatory in mid-September (casa-pipeline-release-5.6.1-8). The Cycle 7 Pipeline release continues to increase the scientific impact of ALMA. In the interferometry pipeline, spectral scans and multi-target, multi-spectral-specification projects are now supported, provided the calibrators are the same for all spectral specifications. Observations of ephemeris sources are, for the first time, fully supported in both the interferometry and single dish pipelines. This release improves processing efficiency by reducing memory usage in mosaic imaging with tclean and by making greater use of parallel processing in the single dish pipeline. Improved weblog readability and updated plots were also a focus of this release.

A second pipeline release to support VLA standard PI processing and Science Ready Data Products (SRDP) was made available in early November (casa-pipeline-release-5.6.2-2). For SRDP, the new pipeline enables downloads of calibrated visibility data for ALMA and the VLA, as well as user-driven re-imaging of ALMA cubes. Downloads of calibrated visibility data are achieved through a pipeline-automated “restore” of calibration tables applied to uncalibrated MeasurementSets. For re-imaging, users can selectively image a portion of their observation with filters such as spectral window, starting velocity, channel width and number of channels. Both of these SRDP features are presented via the new NRAO Archive website.
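Selecting a sub-cube by starting velocity, channel width, and number of channels amounts to choosing a set of channel frequencies. Assuming the radio velocity convention ν = ν₀(1 − v/c) (the archive may use a different convention or reference frame), the channel centres follow directly, as in the sketch below. The function name and the CO(1-0) example are illustrative assumptions, not the archive's implementation.

```python
C_KM_S = 299792.458  # speed of light in km/s


def radio_velocity_channels(rest_freq_hz, start_kms, width_kms, nchan):
    """Channel-centre frequencies (Hz) for a velocity-defined cube.

    Assumes the radio convention nu = nu0 * (1 - v / c).
    """
    velocities = [start_kms + i * width_kms for i in range(nchan)]
    return [rest_freq_hz * (1.0 - v / C_KM_S) for v in velocities]


# Illustrative request: CO(1-0) at 115.2712018 GHz, 5 channels of 1 km/s from -2 km/s.
chans = radio_velocity_channels(115.2712018e9, -2.0, 1.0, 5)
for nu in chans:
    print(f"{nu / 1e9:.6f} GHz")
```

Positive velocity widths produce decreasing frequencies, so the channel at v = 0 sits exactly at the rest frequency in the middle of this example.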

The Pipeline team wrapped up the month of October with a face-to-face meeting in Charlottesville. We reviewed our previous activity, heard from our ALMA, VLA, and SRDP stakeholders and began to map out a prioritized plan for the year ahead. Stay tuned!

Nobeyama Pipeline

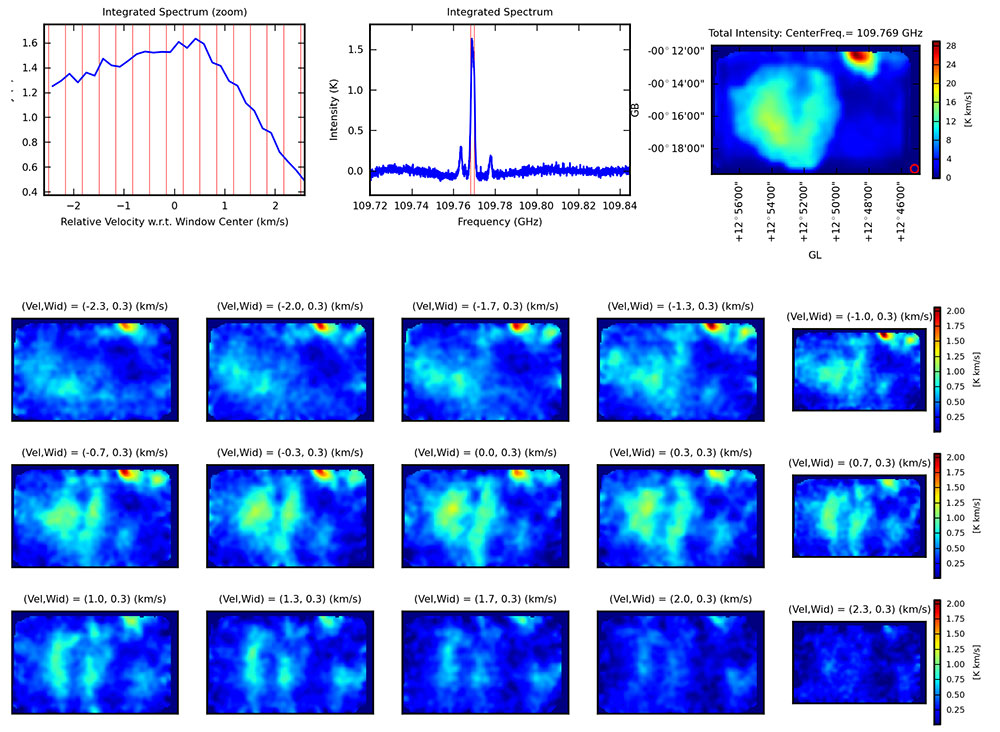

Image file generated by the Nobeyama Pipeline. [Top Right] Integrated intensity map of the entire observed region. [Top Middle] Integrated spectrum. Red vertical lines indicate the frequency range for the channel map shown at the bottom. [Top Left] Enlarged view of the channel map range along a velocity axis. Each column divided by red vertical lines corresponds to individual panels in the channel map. [Bottom] Channel map.

The ALMA single dish pipeline has been developed on top of the single dish data reduction capability of CASA. It has been used for data reduction of ALMA Total Power Array data since Cycle 3, and the development team just completed a new release for Cycle 7 observing.

In parallel with development for ALMA, we have also been working on the Nobeyama Pipeline, a project to adapt the ALMA single dish pipeline to data acquired with the Nobeyama Radio Observatory 45m (NRO45m) telescope, in close collaboration with NRO staff since 2016. These efforts are expected to bear fruit later this year with the first release of the Nobeyama Pipeline.

Despite its name, the project has required significant contributions from outside the pipeline development team. Indeed, it started with the development of an application based on CASA's C++ library. Although CASA can already import NRO45m data, NRO decided to develop a new standalone application to merge raw NRO45m data into a MeasurementSet (MS). This application adopts the framework originally developed for the single dish import tasks importasap and importnro, which required collaboration with the CASA single dish development team.

Once we could produce a valid MS from NRO45m data, we made the pipeline capable of processing it. Because the ALMA single dish pipeline can work on any telescope's data with minor tweaks once the data are in MS form, most pipeline tasks handle NRO45m data directly. The only exceptions are the importdata, exportdata, and restoredata tasks. These three tasks are prefixed with hsdn, in contrast to the other single dish tasks, which are prefixed with hsd, to indicate that they are specialized for NRO45m. The hsdn_importdata task handles pipeline behavior specific to NRO45m data, the products exported by the hsdn_exportdata task were defined according to NRO's requirements, and the hsdn_restoredata task has an option to accept relative gain correction information supplied by the user.

We also defined a "recipe", which describes the overall workflow, for the Nobeyama Pipeline. The general structure of the Nobeyama recipe is the same as that of the ALMA recipe; the Nobeyama recipe simply omits some ALMA-specific tasks and replaces the generic importdata/exportdata tasks with their hsdn-prefixed counterparts. The development team and NRO scientists refined the pipeline's input and output through several prototype versions.
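The relationship between the two recipes can be pictured as a transformation of an ordered list of pipeline stages: drop the ALMA-specific stages and swap in the hsdn-prefixed import and export tasks. In the sketch below, the stage list is a simplified, hypothetical stand-in for the real ALMA recipe, and hsd_alma_only_stage is a placeholder name rather than an actual pipeline task.

```python
# Simplified, hypothetical ALMA single dish recipe (stage names are illustrative).
ALMA_SD_RECIPE = [
    "hsd_importdata",
    "hsd_flagdata",
    "hsd_skycal",
    "hsd_alma_only_stage",  # placeholder for ALMA-specific steps
    "hsd_applycal",
    "hsd_baseline",
    "hsd_blflag",
    "hsd_imaging",
    "hsd_exportdata",
]


def derive_recipe(base, omit, replace):
    """Keep the order of `base`, drop stages in `omit`, rename stages in `replace`."""
    return [replace.get(stage, stage) for stage in base if stage not in omit]


nobeyama = derive_recipe(
    ALMA_SD_RECIPE,
    omit={"hsd_alma_only_stage"},
    replace={
        "hsd_importdata": "hsdn_importdata",
        "hsd_exportdata": "hsdn_exportdata",
    },
)
print(nobeyama)
```

Keeping the generic stages untouched is what lets most of the single dish pipeline be shared between the two telescopes.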

As a result of our four-year effort, we were able to run the Nobeyama Pipeline through to completion and obtain the intended output. The Figure shows a sample of the pipeline output, demonstrating that the Nobeyama Pipeline is capable of processing NRO45m data. After carefully examining the pipeline results for several test datasets, we decided to make the initial release this year. This will be an important milestone, but not our final goal: we will continue to improve and optimize the Nobeyama Pipeline. We also intend to collaborate with the Nobeyama Science Data Archive, where Nobeyama Pipeline products and raw data will be stored. The pipeline output is designed so that users can easily restore or re-process their data with tuned parameters and/or supplementary data. We are also considering displaying images extracted from the pipeline products in response to users' archive queries.

CARTA 1.2

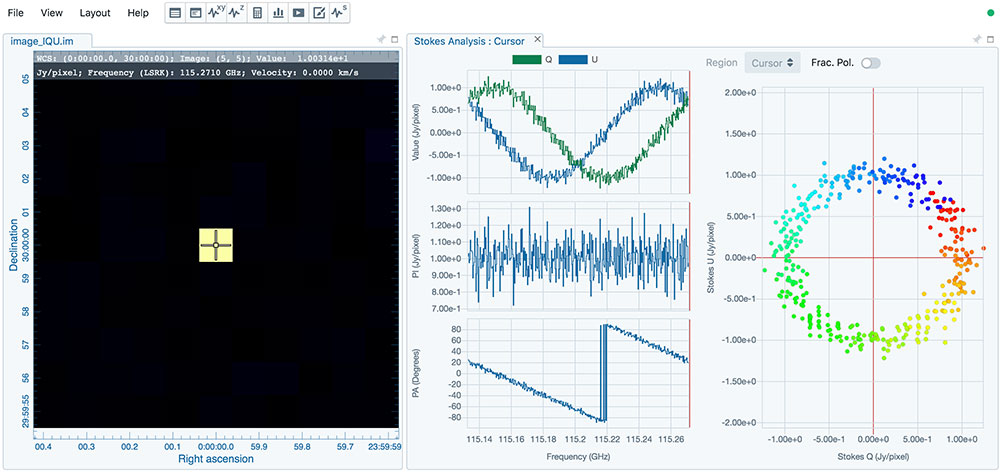

Image of the Stokes widget.

The Cube Analysis and Rendering Tool for Astronomy (CARTA) has made significant progress recently, including the release of a new version, v1.2.

The CARTA project puts emphasis on performance, and v1.2 adds tile rendering for resource optimization. Terabyte-sized image cubes can be loaded into CARTA in seconds. The performance requirements are driven mainly by large survey data that will be made available on remote data servers at the Institute for Data Intensive Astronomy (whose HDF5 image format is now supported by CARTA), NRAO, ALMA, and elsewhere. Local, browser-based clients can then connect from anywhere without the need to download the data to a desktop.
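Tile rendering is what makes fast loading of very large cubes plausible: the client only needs the tiles that intersect the current view of the current channel, at a resolution no finer than the screen can show. The sketch below illustrates that general tile-pyramid idea. The 256-pixel tile size, power-of-two downsampling levels, and function name are assumptions for illustration, not a description of CARTA's actual implementation or protocol.

```python
import math


def tiles_for_view(image_w, image_h, view, screen_w, tile=256):
    """Choose a downsampling level and the tiles needed to draw a viewport.

    view: (x0, y0, x1, y1) in full-resolution image pixels (x1, y1 exclusive).
    screen_w: width of the display area in screen pixels.
    Returns (level, tiles): the image is binned by 2**level, and tiles is a
    list of (col, row) indices on that binned grid.
    """
    x0, y0, x1, y1 = view
    # Coarsest power-of-two binning that still leaves >= 1 binned pixel per screen pixel.
    ratio = (x1 - x0) / screen_w
    level = int(math.floor(math.log2(ratio))) if ratio > 1 else 0
    span = tile * 2 ** level  # full-resolution pixels covered by one tile
    cols = range(x0 // span, min(math.ceil(x1 / span), math.ceil(image_w / span)))
    rows = range(y0 // span, min(math.ceil(y1 / span), math.ceil(image_h / span)))
    return level, [(c, r) for r in rows for c in cols]


# Full view of a 16384 x 16384 channel on a 1024-pixel-wide panel, then a zoomed view.
full_level, full_tiles = tiles_for_view(16384, 16384, (0, 0, 16384, 16384), 1024)
zoom_level, zoom_tiles = tiles_for_view(16384, 16384, (5000, 5000, 5512, 5512), 1024)
print(full_level, len(full_tiles), zoom_level, len(zoom_tiles))
```

In this example the full view needs only 16 binned tiles and the zoomed view 9 full-resolution tiles, rather than the 4096 full-resolution tiles that cover the whole channel.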

Version 1.2 also includes support for regions of interest. Regions are based on the CASA Region Text Format (CRTF), with ds9 regions planned for a later stage. The current version supports points, rotated boxes, ellipses, and polygons, which can be created and modified in CARTA and imported from or exported to CRTF files. Region statistics and spectra can be obtained for each region separately.
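For reference, a CRTF file is plain text: a #CRTFv0 header line followed by one region per line. The hypothetical helpers below write the four region types listed above using the shape keywords rotbox, ellipse, poly, and symbol from CASA's Region Text Format. The coordinates and sizes are made up, and the CASA documentation remains the authority on the full syntax (for example, exactly how the two ellipse axis lengths are defined).

```python
def crtf_rotbox(ra, dec, width, height, angle_deg):
    """Rotated box: centre, full widths, rotation angle."""
    return f"rotbox [[{ra}, {dec}], [{width}, {height}], {angle_deg}deg]"


def crtf_ellipse(ra, dec, b1, b2, pa_deg):
    """Ellipse: centre, the two axis lengths (b1, b2), position angle."""
    return f"ellipse [[{ra}, {dec}], [{b1}, {b2}], {pa_deg}deg]"


def crtf_poly(vertices):
    """Polygon from a list of (ra, dec) vertex strings."""
    pts = ", ".join(f"[{ra}, {dec}]" for ra, dec in vertices)
    return f"poly [{pts}]"


def crtf_point(ra, dec):
    """Point, written as a symbol region with the '.' marker."""
    return f"symbol [[{ra}, {dec}], .]"


def write_crtf(lines):
    """Prefix the version header and join one region per line."""
    return "\n".join(["#CRTFv0"] + lines) + "\n"


text = write_crtf([
    crtf_rotbox("10h00m00s", "+02d00m00s", "30arcsec", "10arcsec", 45),
    crtf_ellipse("10h00m05s", "+02d01m00s", "20arcsec", "10arcsec", 30),
    crtf_poly([("10h00m10s", "+02d00m00s"), ("10h00m12s", "+02d00m30s"),
               ("10h00m08s", "+02d00m40s")]),
    crtf_point("10h00m02s", "+01d59m30s"),
])
print(text)
```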

A unique new feature is the Stokes analysis widget, which allows users to view basic polarization quantities of multi-channel, multi-Stokes cubes. The widget can display the Stokes Q and U values and the derived polarization angle and fractional polarization in various ways (see Figure).
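The derived quantities mentioned above follow from standard definitions: the linearly polarized intensity P = √(Q² + U²), the fractional polarization P/I, and the polarization angle χ = ½ arctan(U/Q), evaluated with the two-argument arctangent so that the correct quadrant is kept. The NumPy sketch below computes them per pixel. It does not apply noise debiasing or any other correction the widget may use, and the function name is hypothetical.

```python
import numpy as np


def polarization_quantities(i, q, u):
    """Per-pixel linear polarization from Stokes I, Q, U.

    Returns (P, P/I, chi_deg) with P = sqrt(Q^2 + U^2) and
    chi = 0.5 * atan2(U, Q) in degrees. Pixels with I == 0 get NaN fraction.
    """
    i, q, u = (np.asarray(x, dtype=float) for x in (i, q, u))
    p_lin = np.hypot(q, u)
    frac = np.divide(p_lin, i, out=np.full_like(p_lin, np.nan), where=i != 0)
    chi = 0.5 * np.degrees(np.arctan2(u, q))
    return p_lin, frac, chi


p_lin, frac, chi = polarization_quantities([2.0, 1.0, 1.0], [1.0, 0.0, -1.0], [0.0, 1.0, 0.0])
print(p_lin, frac, chi)
```

The three test pixels have the same polarized intensity but angles of 0°, 45°, and 90°, showing why the two-argument arctangent is needed.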

For convenience, we also introduced options for user-defined preferences, layouts, and performance settings.

For a full list of new features, downloads, user manual, and user help, please visit the CARTA home page.

Major goals for the next release are world coordinate system matching, multi-image overlays including contour images, and catalog display.

Career Opportunities

The CASA team is growing! We are currently looking for qualified Software Engineers to join the CASA development team at NRAO. Our prospective colleagues will help with the design, development, and maintenance of the code base for cutting-edge radio interferometer data processing and visualization. These positions are located at either the NRAO headquarters (ALMA North American Science Center) in Charlottesville, Virginia USA, or the VLA Array Operations Center in Socorro, New Mexico USA.

Please refer to the following job announcement:

Very soon we hope to post two additional CASA job openings on the above website, so stay tuned!